When proprietary data access is a bottleneck, enterprise client adoption stalls

Six-month data integrations kill momentum for AI companies scaling LLMs, predictive models, and RAG solutions. EASL handles extraction, validation, and transformation so sources can come online in days, even behind strict firewalls, and engineering teams can focus on algorithms instead of triaging pipelines.

The data friction slowing down every AI/ML team

Engineering teams tied up in extraction, validation, transformation tasks instead of model development

Handling client data securely is a core client concern

Unstructured, inconsistent, or high-frequency sources frequently break simple API based pipelines

Secure or firewalled environments create a roadblock for conventional data ingestion

Inefficient data movement across cloud storage buckets drives up data processing costs

Schema drift and definition changes that leads to manual updates across entire pipelines

Pressure to update models with fresh inputs while onboarding new data at the same time

Where EASL works within the AI and ML ecosystem

Applied AI

Data scientists hired to build predictive models shouldn't spend their days wrangling partner feeds and fixing faulty pipelines. EASL handles the ingestion, normalization, and validation of diverse training datasets, regardless of format, freeing your engineering talent to do what they were hired for.

Onboarding third-party and partner data without pulling ML engineers off core work

Preparing unstructured data for modeling and feature pipelines

Delivering production-ready data into training environments

Stabilizing integrations that collapse whenever partners update schemas or APIs

RAG and Vector Embedding Workflows

Minor data inconsistencies disrupt index creation, degrade retrieval accuracy, and slow model inference. EASL keeps embedding pipelines fed with clean, validated data, even when upstream sources shift.

Preparing documents, historical datasets, and event streams for vector embedding

Managing schema volatility during ingestion for vector-store pipelines

Ensuring consistent, queryable truth records for auditability and regulatory review

Automating reconciliation when upstream structures or client logic change

Secure Data Environments

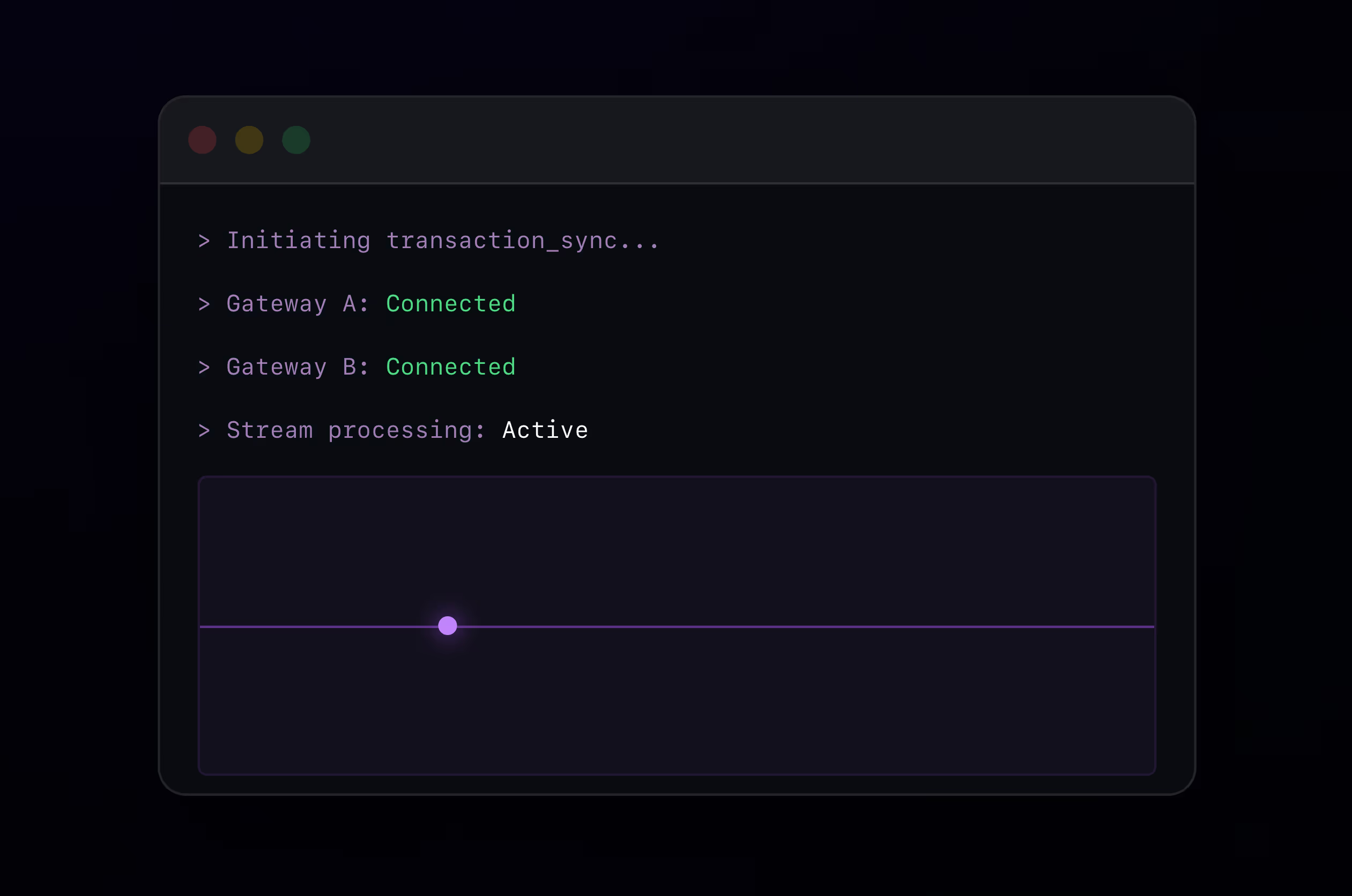

AI companies increasingly need to process sensitive client data under strict security constraints. EASL operates behind firewalls and inside customer-controlled environments, enabling bring-your-own-data workflows without compromising compliance.

Deploying containerized fetch engines inside customer network

Automating validation within secure enclaves

Reducing cloud-egress and S3-copy costs by limiting unnecessary data movement

Handling PII-sensitive workloads with full end-to-end encryption and SOC 2 Type II controls

How EASL rewrites the rules for AI and ML data movement

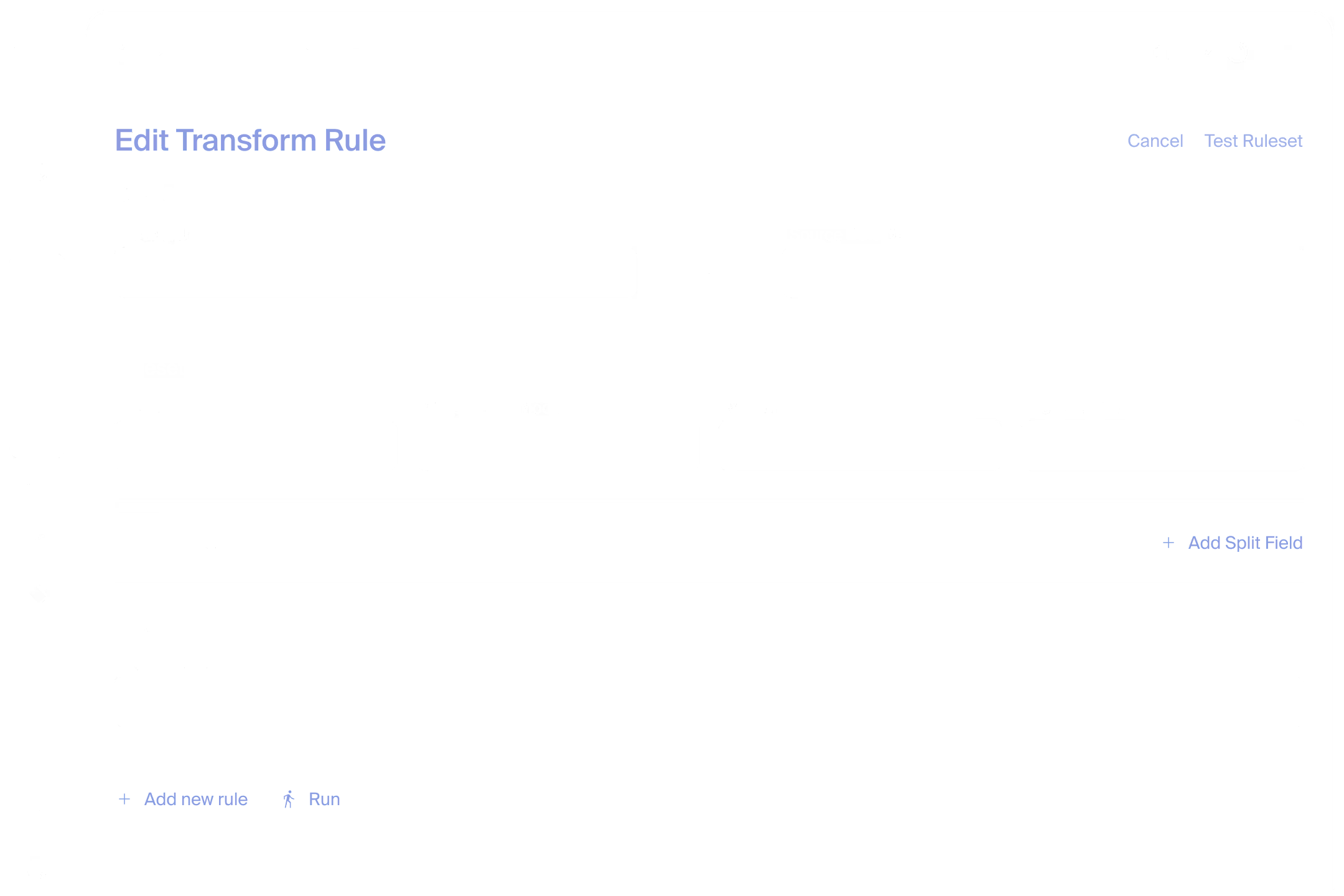

AI teams deal with drifting formats, changing partner feeds, and security constraints that limit data movement Unlike conventional ETL, EASL's DataDevOps approach absorbs that volatility and keeps data flows stable without constant rebuilds.

Adaptive pipelines

Pipelines that adjust automatically when sources, schemas, or definitions change

Validation in motion

With automated error detection and resolution, cutting reconciliation time from days to minutes

Parallel onboarding

Onboard multiple client datasets in parallel to eliminate integration backlogs

Complete lineage and auditability

For confident regulatory and internal reporting

Deployment flexibility

Built on Kubernetes for scalable, portable, behind-firewall or cloud use

Reduced cloud-processing and egress costs

Efficient data-movement flows

The moment an AI company stopped losing time to bad pipelines

The challenge

Ten-source onboarding backlog

Each integration historically required 4–6 months of engineering time

Data scientists spent most of their time on extraction and cleansing instead of modeling

Revenue stuck behind unfinished data integrations

What EASL delivered

Deploying containerized fetch engines inside customer network

Automating validation within secure enclaves

Reducing cloud-egress and S3-copy costs by limiting unnecessary data movement

Handling PII-sensitive workloads with full end-to-end encryption and SOC 2 Type II controls

Business impact

Years of sequential work compressed into a single quarter

Avoided costly internal tooling and saved significant CapEx

Deals finally went live, unlocking millions in same-year revenue recognition

See more real-world results in our case study library.

AI Data Movement FAQs

How does EASL address AI companies’ critical data needs and deliver better outcomes?

EASL delivers an adaptive data-movement platform that handles ingestion, validation, transformation, error resolution, and publishing across unstructured, proprietary, and high-frequency data sources. AI teams get clean, consistent, ready-to-use data without spending months on integrations.

Can EASL work inside secure or firewalled environments?

Yes. EASL supports behind-firewall, containerized, or air-gapped deployments. Data never leaves the client’s environment unless explicitly configured to do so.

How fast can EASL onboard new data sources?

Complex integrations that previously required several months are reduced to days. Automated validation and schema-aware transformation further shorten timelines.

Can EASL integrate with our existing ETL and data-warehouse tools?

Absolutely. EASL runs alongside your current infrastructure, fetching from any source and publishing to any destination. It eliminates manual hand-offs and reduces maintenance across multiple ETL or iPaaS tools.

How does EASL support RAG and vector-embedding pipelines?

EASL maintains consistent data structures and lineage, ensuring embedding pipelines receive stable inputs even when upstream formats change. EASL supports in-transit on-platform vector embedding, so teams can land data sources and their vector embeddings in parallel.

Will EASL replace our existing data tools?

EASL runs alongside your existing ML infrastructure, data platforms, and orchestration tools. It reduces pipeline fragility and minimizes maintenance without disrupting the systems that already work.

What types of AI data can EASL handle?

EASL handles unstructured text, logs, documents, transactional events, historical datasets, partner APIs, user-generated content, and secure customer data—structured, semi-structured, or unstructured—at scale.

Still got questions? Contact us.